Brain study finds circuits that may help you keep your cool

The big day has come: You are taking your road test to get your driver’s license. As you start your mom’s car with a stern-faced evaluator in the passenger seat, you know you’ll need to be alert but not so excited that you make mistakes. Even if you are simultaneously sleep-deprived and full of nervous energy, you need your brain to moderate your level of arousal so that you do your best.

Now a new study by neuroscientists at MIT’s Picower Institute for Learning and Memory might help to explain how the brain strikes that balance.

“Human beings perform optimally at an intermediate level of alertness and arousal, where they are attending to appropriate stimuli rather than being either anxious or somnolent,” says Mriganka Sur, the Paul and Lilah E. Newton Professor in the Department of Brain and Cognitive Sciences. “But how does this come about?”

Postdoc Vincent Breton-Provencher brought this question to the lab and led the study, published Jan. 14 in Nature Neuroscience. In a series of experiments in mice, he shows how connections from around the mammalian brain stimulate two key cell types in a region called the locus coeruleus (LC) to moderate arousal in two different ways. One region particularly involved in one of these calming mechanisms, the prefrontal cortex, is a center of executive function. This suggests the brain may indeed have a circuit for attempting conscious control of arousal.

“We know, and a mouse knows, too, that to counter anxiety or excessive arousal one needs a higher level cognitive input,” says Sur, the study’s senior author.

By explaining more about how the brain keeps arousal in check, Sur says, the study could also provide insight into the neural mechanisms contributing to anxiety or chronic stress, in which arousal appears insufficiently controlled. It might also offer a more mechanistic understanding of why cognitive behavioral therapy can help patients manage anxiety, he adds.

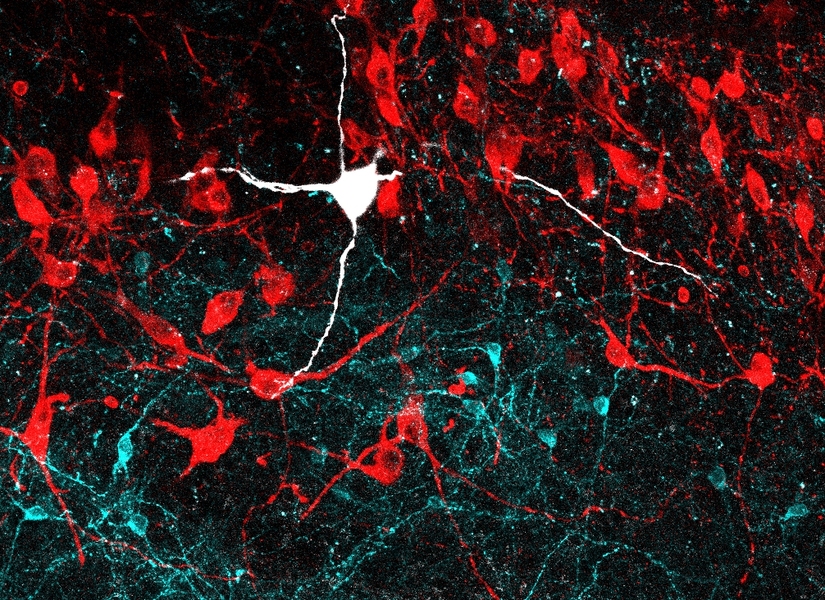

Crucial characters in the story are neurons that release the neurotransmitter GABA, which has an inhibitory effect on the activity of receiving neurons. Before this study, according to Breton-Provencher and Sur, no one had ever studied the location and function of these neurons in the LC, which neurons connect to them, and how they might inhibit arousal. But because Breton-Provencher came to the Sur lab curious about how arousal is managed, he was destined to learn much about LC-GABA neurons.

One of the first things he observed was that LC-GABA neurons were located within the LC in close proximity to neurons that release noradrenaline (NA), which stimulates arousal. He was also able to show that the LC-GABA neurons connect to the LC-NA neurons. This suggested that GABA may inhibit NA release.

Breton-Provencher tested this directly by making a series of measurements in mice. Watching the LC work under a two-photon microscope, he observed, as expected, that LC-NA neuron activity precedes arousal, which was indicated by the pupil size of the mice: the more excited the mouse, the wider the pupil. He was even able to take direct control of arousal by engineering LC-NA cells to respond to pulses of light, a technique called optogenetics. He took over LC-GABA neurons the same way and observed that if he cranked those up, he could suppress arousal, and therefore pupil size.

The next question was which cells in which regions of the brain provide input to these LC cells. Using neural circuit tracing techniques, Breton-Provencher saw that cells in nearly 50 regions connected into the LC cells, and most of them connected to both the LC-NA and the LC-GABA neurons. But there were variations in the extent of overlap that turned out to be crucial.

Breton-Provencher continued his work by exposing mice to arousal-inducing beeps of sound, while he watched activity among the cells in the LC. Making detailed measurements of the correlation between neural activity and arousal, he was able to see that the LC is actually home to two different kinds of inhibitory control.

One type came about from inputs, for instance from sensory processing circuits, that connected simultaneously to LC-GABA and LC-NA neurons. In that case, optogenetically inducing LC-GABA activity would moderate the mouse’s pupil dilation response to the loudness of the stimulating beep. The other type came about from inputs, notably including those from the prefrontal cortex, that connected only to LC-GABA neurons and not to LC-NA neurons. In that case, LC-GABA activity correlated with an overall reduction in arousal, independent of how startling the individual beeps were.

In other words, input into both LC-NA and LC-GABA neurons by simultaneous connections kept arousal in check during a specific stimulus, while input just to LC-GABA neurons maintained a more general level of calm.

In new research, Sur and Breton-Provencher say they are interested in examining the activity of LC-NA cells in other behavioral situations. They are also curious to learn whether early-life stress in mouse models affects the development of the LC’s arousal control circuitry in ways that could put individuals at greater risk for chronic stress in adulthood.

The study was funded by the National Institutes of Health, postdoctoral fellowship funding from the Fonds de recherche du Québec, the Natural Sciences and Engineering Research Council of Canada, and the JPB Foundation.

By: David Orenstein | Picower Institute for Learning and Memory - January 15, 2019